Introduction

The Scottish Highland Clearances were a turbulent and transformative time in Scotland’s history, marked by the displacement of thousands of Highlanders from their ancestral lands. It’s a tale of ambition, greed, and the struggle for survival, as the traditional way of life was uprooted in the name of progress. Join me as we delve into this fascinating yet tragic chapter of Scottish history, exploring its causes, consequences, and the resilient spirit of the Highland people.

A Brief Background on the Highlands

Before we dive into the Clearances, it’s essential to understand the rich cultural tapestry of the Scottish Highlands. The Highlands were home to a vibrant community where land and family were intertwined, and traditional practices, such as farming and clan loyalty, shaped daily life. The landscape was rugged, beautiful, and often unforgiving, yet it was deeply cherished by its inhabitants.

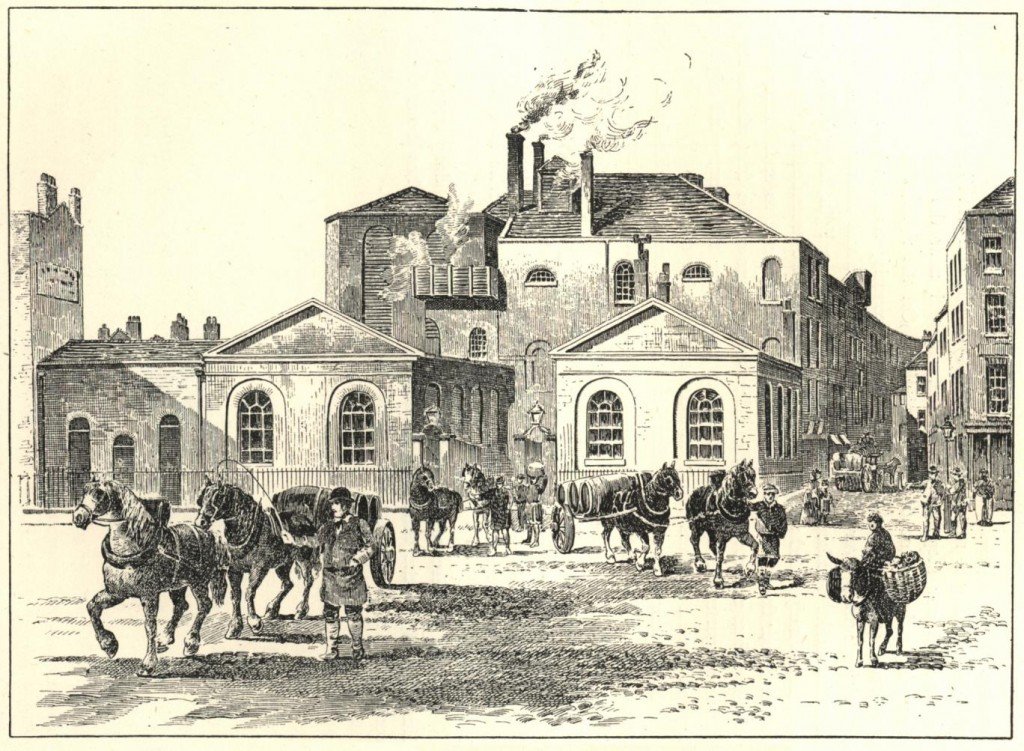

Life in the Highlands revolved around the land: clan communities worked it in common, with agriculture, fishing, and hunting forming the backbone of their economy. However, the 18th century brought significant changes, driven largely by the growing demands of the Industrial Revolution and the British Empire.

The Seeds of Change: Why the Clearances Happened

As the 18th century progressed, a series of socio-economic changes began to take root. The demand for agricultural products increased, and landowners began to shift their focus from subsistence farming to more profitable ventures, particularly sheep farming. This shift was influenced by various factors, including:

Economic Pressures

The agricultural revolution brought innovation and new farming techniques. Landowners saw the potential for profit in raising sheep, whose wool could feed the booming textile industry. This pursuit of profit transformed the Highland landscape, as traditional farming settlements were cleared to make way for grazing land.

Political Climate

The defeat of the last Jacobite rising at Culloden in 1746 left the Scottish Highlands politically unsettled. The British government moved to consolidate control over the region, stripping clan chiefs of much of their traditional authority and eroding the old power structures. As those bonds weakened, landowners had a growing incentive to clear their lands of tenants they deemed “inefficient,” including those who clung to the old ways.

Social Dynamics

With the growth of cities and industrial centers in Scotland and beyond, there was a rising demand for labor. Landowners believed that clearing Highlanders from the land would not only increase their profits but also push displaced people to seek work in urban areas. They saw the Clearances as not just a financial decision but a social one, shaping the future of Scotland.

The Clearances Begin

The Clearances didn’t happen overnight; they unfolded in a series of waves, often with great brutality. The process typically involved the eviction of tenants, sometimes with little or no notice, as landowners sought to maximize profits by converting arable land into sheep pastures.

Methods of Eviction

Evictions were carried out with ruthless efficiency. In many cases, tenants were simply handed a notice of eviction, sometimes with only a few weeks to vacate. Those who refused to leave faced the threat of violence or the destruction of their homes. The emotional toll on communities was enormous, as families were torn apart and entire villages were abandoned.

The Role of Agents

Landowners often employed agents, known as factors, to oversee the Clearances and enforce eviction orders. Many agents were ruthless, driven by financial incentives and showing little empathy for the Highlanders. Their methods could be brutal, leading to widespread suffering among the displaced.

The Exodus Begins

As the Clearances progressed, many Highlanders found themselves without a home, and the only option was to leave their beloved land. This led to mass migrations, both within Scotland and beyond.

Emigration to America and Beyond

Large numbers of Highlanders sought refuge in Canada, the United States, and Australia. They hoped to find a new life, yet the journey was fraught with peril. Many faced difficult conditions at sea, and upon arrival in their new homes, they encountered challenges in integrating into foreign societies.

The Impact on Communities

The Clearances devastated Highland communities. Traditional social structures crumbled, and the cultural heritage that had been preserved for generations began to fade. Additionally, the loss of land meant a loss of identity for many Highlanders, leading to a feeling of disconnection from their roots.

The Aftermath: A New Way of Life

While the Clearances reshaped the Highlands in profound ways, they did not extinguish the spirit of the Highland people. Instead, Highlanders adapted and found new ways to express their identity.

Cultural Resilience

Despite the hardships, Highlanders maintained cultural practices, such as music, storytelling, and traditional dress. The Highland games and ceilidhs (gatherings with music and dance) continued to thrive, promoting a sense of community even in foreign lands.

Political Awakening

The injustices faced during the Clearances sparked a political awakening among the Highlanders. Over time, movements began to advocate for land reform and the rights of rural communities, and the Crofters' Holdings (Scotland) Act of 1886 eventually gave crofters security of tenure. The legacy of the Clearances ultimately contributed to a greater awareness of social justice issues in Scotland.

The Legacy of the Clearances

Today, the Clearances are viewed as a dark chapter in Scottish history, but they have also become a symbol of resilience and the fight for rights. Various memorials and historical sites throughout the Highlands pay tribute to those who suffered during this period.

Historical Reflection

As we reflect on this period, it’s essential to acknowledge its complexity. Some landowners were driven by profit, while many also believed they were contributing to progress. Whatever the motive, the repercussions for the Highland people were profound and lasting.

Cultural Revival

In recent years, there has been a revival of interest in Highland culture, language, and traditions. Organizations are dedicated to preserving the heritage that was nearly lost during the Clearances, and initiatives aimed at revitalizing the Gaelic language are gaining traction.

Conclusion

The Scottish Highland Clearances were more than just a series of evictions; they were a profound upheaval of a way of life that shaped the identity of a people. The legacy of this time continues to resonate in modern Scotland, reminding us of the resilience of the Highlanders and their enduring connection to the land. As we learn from the past, we must strive to honor and celebrate the culture that has survived against all odds, ensuring that the lessons of history are never forgotten.